Working with Ollama, Part 1

In the first installment of our two-part series “Working with Ollama,” we introduce the open-source, cross-platform solution Ollama, which simplifies both the management and usage of AI models.

Why Ollama?

When developing AI-powered applications, we often face the task of evaluating new or specialized LLMs (Large Language Models) and/or assessing the feasibility of integrating an LLM on a given hardware setup. These tasks can quickly become time-consuming and complex, especially when you need to run models locally or embed them into existing systems.

Ollama is an open-source, multi-platform tool designed to streamline these processes by providing a consistent, developer-friendly environment that makes both using and managing AI models straightforward. In this two-part series, we’ll cover installing Ollama and integrating it with frontend solutions like AnythingLLM. In Part 1, we’ll focus on installation and basic usage.

Installation

Installing Ollama couldn’t be simpler. Dedicated installers are available for macOS and Windows (on macOS they’re even code-signed and run without extra tweaks!), and for Linux the Ollama team provides a one-liner that works across all major distributions.

Since most of our development happens on Linux machines, we’ll concentrate here on installing and using Ollama on Linux. The one-liner downloads and runs an install script:

curl -fsSL https://ollama.com/install.sh | sh

Security-conscious Linux users might raise an eyebrow when the script asks for your password, but rest assured: this comprehensive script—also used to update existing installations—detects your architecture, fetches the appropriate binaries, creates an “ollama” user, installs any necessary drivers and packages (including CUDA for NVIDIA and ROCm for AMD), and configures Ollama to run as a systemd service.

Working with Models

Once Ollama is installed, you can load and run any model from the searchable Ollama repository with a simple command:

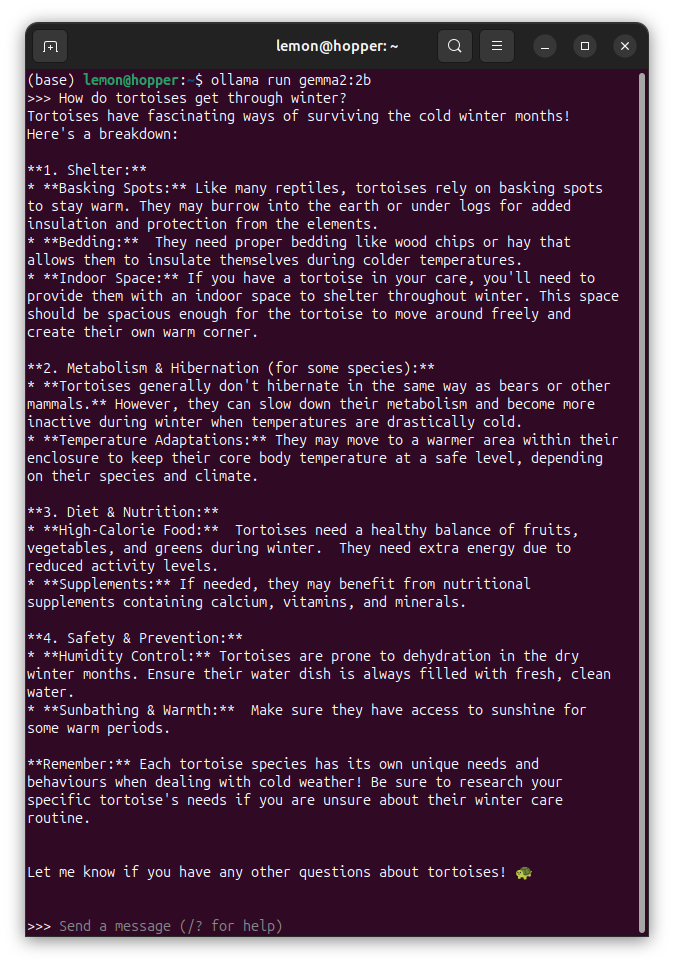

ollama run gemma2:2b

If the referenced model isn’t already on your machine, Ollama will automatically download and install it. You can then invoke the model directly from the command line for quick tests:

While invoking LLMs from the terminal is convenient for experiments, Ollama’s real power lies in its RESTful API. For example:

curl http://localhost:11434/api/generate -d '{

"model": "gemma2:2b",

"prompt":"How do tortoises get through winter?"

}'

…which streams responses as JSON chunks:

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.883151191Z","response":"Tor","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.890568853Z","response":"to","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.899045495Z","response":"ises","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.909842313Z","response":" have","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.917914788Z","response":" some","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.928657502Z","response":" clever","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.939221939Z","response":" adaptations","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.947483663Z","response":" to","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.958544652Z","response":" survive","done":false}

{"model":"gemma2:2b","created_at":"2025-01-23T11:22:34.966899373Z","response":" the","done":false}

[…]

Clients are responsible for reassembling the streamed output when "done": true.

Great news for developers: Ollama offers official Python and JavaScript libraries to simplify API interactions (as well as unofficial libraries for practically every other language—even Lisp: https://github.com/veer66/cl-ollama ). For example, using the Python client:

from ollama import generate, GenerateResponse

g_response: GenerateResponse = generate(model='gemma2:2b', prompt='How do tortoises get through winter?')

print(g_response.response)

The Python library (https://github.com/ollama/ollama-python) handles streaming under the hood, though you can still work with raw chunks by passing stream=True.

Stay tuned for Part 2 of this series, where we’ll dive into using model files and integrating Ollama with AnythingLLM.