ChatGPT for Teams: Privacy-Compliant Use in the Workplace

In today’s digital business world, AI-powered communication platforms like ChatGPT have become everyday tools for tasks such as answering complex coding questions or drafting high-quality copy for quotes and proposals.

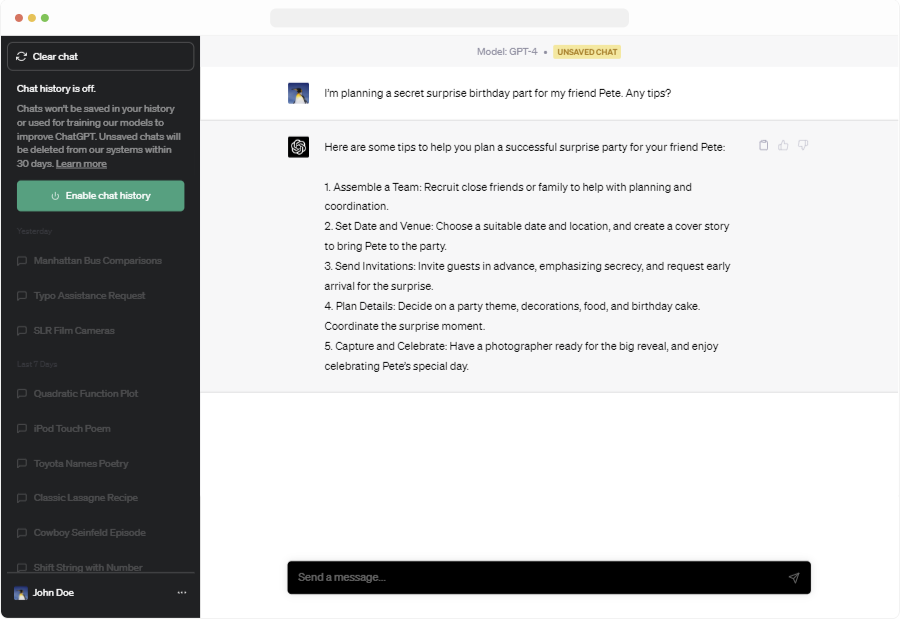

However, for companies that handle sensitive customer data, using ChatGPT raises a data protection dilemma. ChatGPT does offer an option to keep chat conversations from being used for training, but it comes with certain limitations. Moreover, as of June 2023, there is no way to manage multiple team members through a company account. Each user must register individually with their own email address, phone number, and credit card. If you want to use ChatGPT+, for example, you cannot pay for all users with a single credit card, and the invoices go to each user individually, which creates an organizational and accounting nightmare.

We at DIVISO ran into this problem as well and went looking for a solution.

The current solution from ChatGPT

In its settings, ChatGPT lets you opt out of having your conversations used for training. However, the only way to do this is to turn off chat history. As a result, new conversations no longer appear in the history sidebar, so you cannot keep several chats and return to them later. This can be a significant restriction for companies that rely on thorough documentation and tracking of their communication. Nevertheless, this option is a first step towards data protection when using ChatGPT.

ChatGPT(+) and OpenAI API - What’s the difference?

There are two ways to use OpenAI’s offerings:

- ChatGPT / ChatGPT+: This offering is aimed at individual and private users who simply want a chat assistant. ChatGPT is the free tier; it only provides access to GPT-3.5, and only when computing capacity is available. ChatGPT+ is the paid subscription, which remains available even at peak times and also gives access to GPT-4.

- OpenAI API: This offering is aimed at businesses and is primarily intended for developing new products. However, the chat models are available through the API as well. Because the API is designed for business use, it allows, among other things, usage costs to be billed centrally to a single account.
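Here is what "chat via the API" means in practice. Each chat turn is an HTTPS request to OpenAI’s chat completions endpoint, authenticated with the organization’s API key. The TypeScript sketch below only builds such a request and does not send it. The helper and type names are hypothetical. The endpoint URL, the `Authorization: Bearer` header, and the `model`/`messages` body fields follow OpenAI’s public API reference as of mid-2023. The key `sk-...` is a placeholder.

```typescript
// Hypothetical helper: builds (but does not send) a request to OpenAI's
// chat completions endpoint, following the public API reference (mid-2023).
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

interface ChatRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

const CHAT_COMPLETIONS_URL = "https://api.openai.com/v1/chat/completions";

function buildChatRequest(
  apiKey: string,
  model: string,
  messages: ChatMessage[],
): ChatRequest {
  if (apiKey.trim() === "") {
    throw new Error("An OpenAI API key is required");
  }
  return {
    url: CHAT_COMPLETIONS_URL,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      // One organization key for everyone: usage is billed to that account.
      Authorization: `Bearer ${apiKey}`,
    },
    // The whole conversation goes into every request body.
    body: JSON.stringify({ model, messages }),
  };
}

// Usage: send it with fetch(req.url, { method: req.method, headers: req.headers, body: req.body }).
const req = buildChatRequest("sk-...", "gpt-3.5-turbo", [
  { role: "user", content: "Summarize this offer in three bullet points." },
]);
console.log(req.method, req.url);
```

The chat completions endpoint does not remember earlier turns, so the client must resend the message history with each request. Keeping and storing that history is therefore the job of whatever frontend sits on top of the API.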

OpenAI API for more control over data

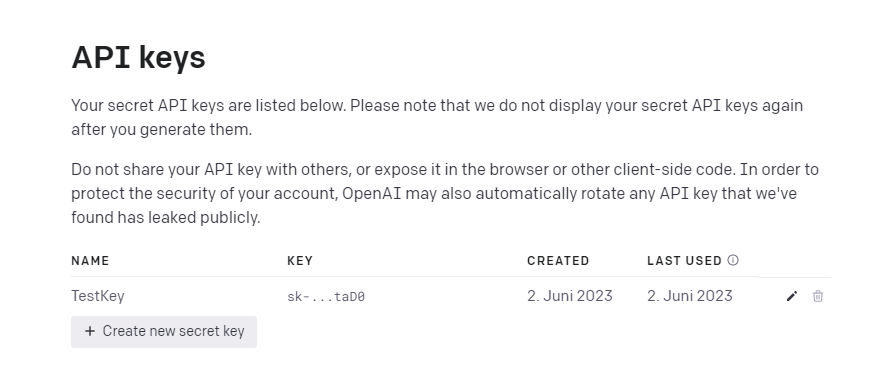

A promising alternative is to use the OpenAI API. OpenAI’s API data usage policies state:

“OpenAI will not use data submitted by customers via our API to train or improve our models, unless you explicitly decide to share your data with us for this purpose. You can opt-in to share data.”

In other words, data you send via the API is not used to train or improve OpenAI’s models unless you explicitly opt in. This gives you more control over which data you share with OpenAI and how it is used than the standard ChatGPT interface offers.

The solution with “Technologic - AI Chat” (Open Source)

The API does not come with its own user interface, which makes sense, since it is intended for building applications. However, there are many alternative chat frontends that connect to the API and give you access to the same chat models as ChatGPT. We chose “Technologic - AI Chat”, developed by our friends at Xpress.ai. This chat frontend is based on the Svelte framework and is easy to use, customizable, and integrates seamlessly with the OpenAI API. It also offers the data protection and usability features that matter most to us:

- Secure Storage: Your conversations are stored locally on your computer, in your browser’s IndexedDB storage.

- Bring Your Own API Key: You can easily configure your OpenAI API key or another compatible backend (e.g., xai-llm-server).

- Organized Conversations: You can organize your conversations in folders to keep them tidy.

- Message Editing: You can edit both the messages you send and the responses you receive.

- Conversation Branching: You can switch between different topics without losing context.
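One way to picture conversation branching is as a tree of messages. Each message points to its parent, so following up differently on an earlier message creates a sibling branch instead of overwriting history. The context sent to the model is the path from the first message to the selected one. The TypeScript sketch below illustrates that idea only. It is a hypothetical data structure, not Technologic’s actual implementation, and the role/content message shape is assumed to match the OpenAI chat format.

```typescript
// Hypothetical sketch of a branching conversation (NOT Technologic's code):
// messages form a tree, and the model's context is the root-to-leaf path.
type Role = "system" | "user" | "assistant";

interface MessageNode {
  id: number;
  parentId: number | null; // null marks the first message of the conversation
  role: Role;
  content: string;
}

interface ContextMessage {
  role: Role;
  content: string;
}

class ConversationTree {
  // Messages are stored in insertion order; a message's id is its index.
  private nodes: MessageNode[] = [];

  private has(id: number): boolean {
    return id >= 0 && id < this.nodes.length;
  }

  // Adding a second child under the same parent starts a new branch.
  add(parentId: number | null, role: Role, content: string): number {
    if (parentId !== null && !this.has(parentId)) {
      throw new Error(`Unknown parent message ${parentId}`);
    }
    const id = this.nodes.length;
    this.nodes.push({ id, parentId, role, content });
    return id;
  }

  // All branches that continue from the given message.
  children(parentId: number | null): MessageNode[] {
    return this.nodes.filter((n) => n.parentId === parentId);
  }

  // Walk from the selected message up to the root, then reverse the result.
  path(leafId: number): ContextMessage[] {
    if (!this.has(leafId)) {
      throw new Error(`Unknown message ${leafId}`);
    }
    const result: ContextMessage[] = [];
    let current: number | null = leafId;
    while (current !== null) {
      const node: MessageNode = this.nodes[current];
      result.push({ role: node.role, content: node.content });
      current = node.parentId;
    }
    return result.reverse();
  }
}

// Usage: two alternative follow-ups branch off the same answer.
const tree = new ConversationTree();
const question = tree.add(null, "user", "Draft an offer for a web shop.");
const answer = tree.add(question, "assistant", "Here is a first draft ...");
const formal = tree.add(answer, "user", "Make it more formal.");
const shorter = tree.add(answer, "user", "Shorten it to 100 words.");
console.log(tree.path(shorter).map((m) => m.content));
```

Storing parent pointers rather than copying the whole history into each branch keeps shared messages in one place. Reconstructing a branch's context costs only one walk up the tree.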

Beyond these, “Technologic - AI Chat” offers many more features. For the complete list, see the project’s GitHub page at https://github.com/XpressAI/technologic.

Installing Technologic - AI Chat

To install “Technologic - AI Chat”, simply follow the instructions in the project’s README file:

```shell
git clone https://github.com/XpressAI/technologic.git
cd technologic
npm install
```

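If `npm install` fails because of a dependency conflict with your npm version (npm 7 and later treat conflicting peer dependencies as an error), don’t worry. Run the install with the `--legacy-peer-deps` flag instead, which makes npm ignore peer-dependency conflicts the way older versions did:

```shell
npm install --legacy-peer-deps
```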

Once the installation is complete, start the app:

```shell
npm run dev -- --open
```

This launches the local development server. The `--` passes the `--open` flag through to the server, which then opens the app in a new browser window.

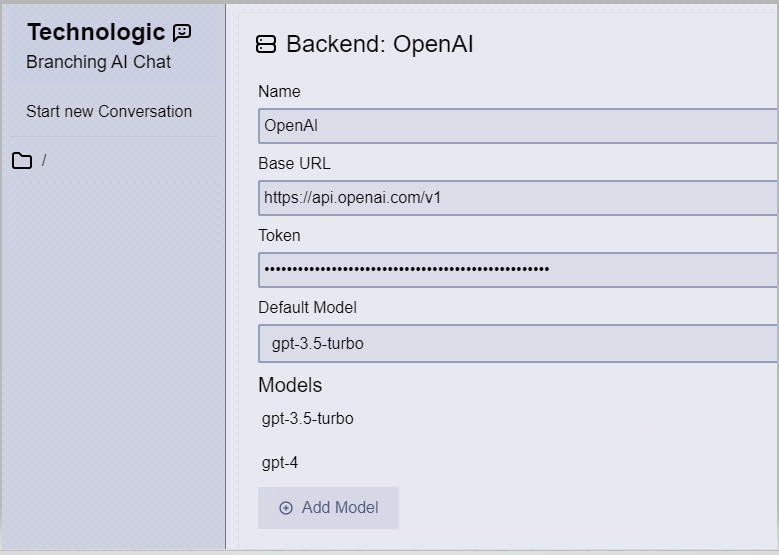

Before you start using the chat application, you need to enter your OpenAI API key in the app’s settings.

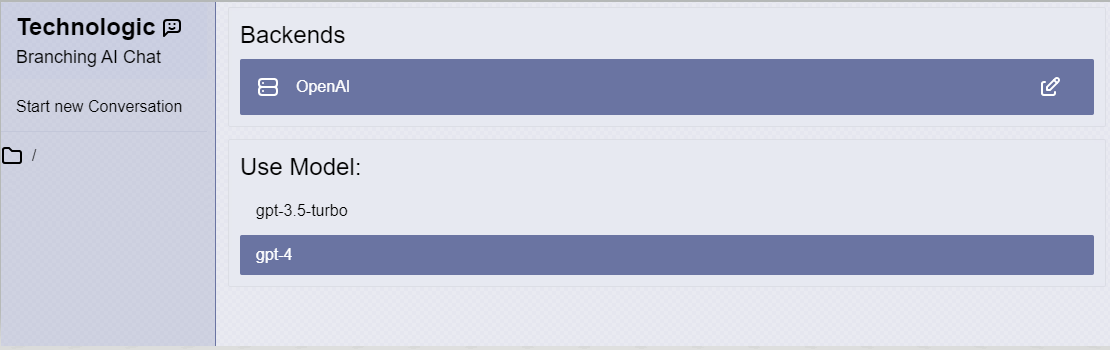

Optionally, you can change the Completions Model in the settings. The default is “gpt-3.5-turbo”, but if you already have GPT-4 access through the API (at the time of writing, this required joining a waiting list), you can switch to GPT-4.
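You can also check programmatically whether your key can already use GPT-4. OpenAI’s `GET https://api.openai.com/v1/models` endpoint lists the models available to your key as objects with an `id` field inside a `data` array. The TypeScript sketch below picks `gpt-4` when it appears in such a response and otherwise falls back to the default `gpt-3.5-turbo`. The function name and preference order are our own assumptions. Fetching the list itself, which uses the same `Authorization: Bearer` header as any other API call, is left out so the example runs offline.

```typescript
// Hypothetical helper: choose a chat model from the response of
// GET https://api.openai.com/v1/models (shape: { data: [{ id: "..." }, ...] }).
interface ModelListResponse {
  data: { id: string }[];
}

// Preferred models first; "gpt-3.5-turbo" is Technologic's default.
const PREFERRED_MODELS = ["gpt-4", "gpt-3.5-turbo"];
const FALLBACK_MODEL = "gpt-3.5-turbo";

function pickChatModel(response: ModelListResponse): string {
  for (const candidate of PREFERRED_MODELS) {
    // Use the first preferred model that the key actually has access to.
    if (response.data.some((model) => model.id === candidate)) {
      return candidate;
    }
  }
  return FALLBACK_MODEL;
}

// Usage with trimmed-down example responses:
const withGpt4: ModelListResponse = { data: [{ id: "gpt-3.5-turbo" }, { id: "gpt-4" }] };
const withoutGpt4: ModelListResponse = { data: [{ id: "gpt-3.5-turbo" }, { id: "whisper-1" }] };
console.log(pickChatModel(withGpt4), pickChatModel(withoutGpt4));
```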

To make “Technologic - AI Chat” easily accessible to non-technical colleagues, you can host it on your own intranet, as we did.

To do this, create a production build:

```shell
npm run build
```

This writes all the files the app needs to a “build” directory, which you can then upload to a server to deploy the app within your company network.

This way, all team members can access the chat application and use it for their tasks.

Conclusion

In this article, we looked at two tricky aspects of using ChatGPT in a company setting: data protection and teamwork.

By combining the OpenAI API with the Technologic frontend, we found a solution that lets all colleagues work under one common company account while each person keeps their own chats with history. The chat histories are stored locally in each user’s browser, and the data sent to the API is not used for training unless we opt in. This gives us much more control over sensitive information than individual ChatGPT accounts and has helped us work efficiently with data protection in mind.

We hope that our experiences can also help other companies leverage the potential of AI-based communication platforms like ChatGPT without compromising on data protection.