The five most common misconceptions about neural networks

AI, and especially neural networks or deep learning, has been one of the big technology hype topics for some years now. However, the subject is quite abstract (one could say it is uncharted territory for most people), so we want to clear up some misconceptions that we often encounter in our work.

1. “Neural networks are artificial brains built with artificial neurons.”

Much of the mistrust toward neural networks in public debate and the media rests on exactly this assumption: the fear that humanity’s greatest asset, the brain, could be decoded so thoroughly that it could simply be reproduced. However, this statement is plain nonsense.

In fact, artificial neurons are mathematical formulas inspired by very simple, outdated models of “real” neurons. Real neurons are significantly more complex. A brain also contains far more neurons than an artificial neural network (ANN). In addition, unlike an ANN, it contains a great many different types of biological neurons.
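The following Python sketch shows what such a “mathematical formula” looks like. It is a single artificial neuron: it computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. The sigmoid activation, the function names and the example numbers are our own illustrative choices. Real networks use various activation functions.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def artificial_neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


# Illustrative values only: 0.5*0.8 + (-1.0)*0.2 + 2.0*(-0.5) + 0.1 = -0.7
output = artificial_neuron([0.5, -1.0, 2.0], [0.8, 0.2, -0.5], bias=0.1)
print(round(output, 4))  # prints 0.3318
```

The whole “neuron” consists of a few multiplications, one addition and one nonlinear function. Compared with a biological nerve cell, that is very little.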

What is true, however, is that ANNs and biological neural networks share a common construction principle: their functionality results from the interaction of numerous, very simple, interconnected components (a principle known as “connectionism”). This is very different from, for example, a simple chess AI. Such a program works through a comparatively complex algorithm step by step, and as far as we know, the human brain has no equivalent to it.

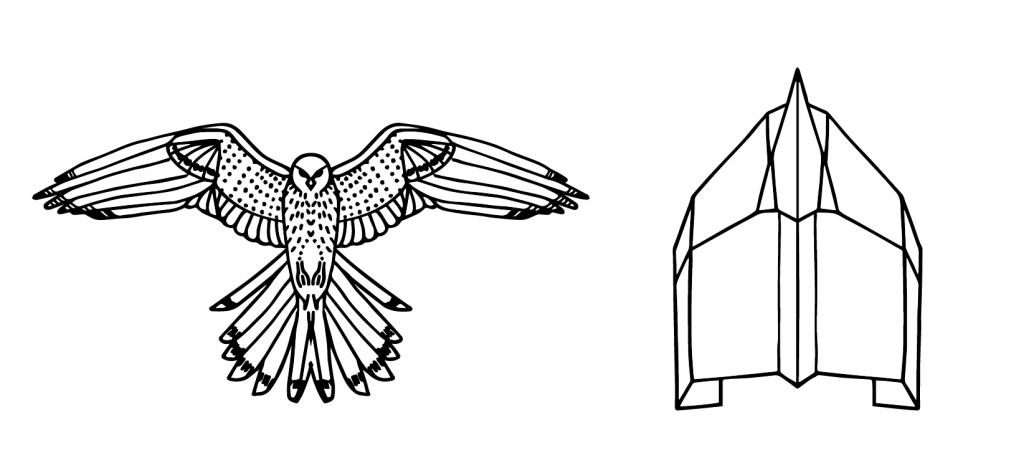

You can think of this in terms of birds and airplanes. Both have wings and are subject to the laws of aerodynamics, so they share some basic, general similarities, but they are also very different. Despite the progress of recent years, we are still at the beginning of this technology, so the comparison is more like a falcon and a paper airplane. The paper airplane can fly short distances, and if you throw it in the right direction it will land roughly where you want it to. But it is far from the flying abilities of a falcon. The same holds for ANNs and the human brain.

2. “Artificial neural networks grow.”

This is a very common misconception. The structure of an ANN is fixed when it is designed and programmed. Only the parameters of the mathematical formulas it is built from change. So nothing grows or shrinks during “learning”, and the structure stays the same.
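Here is a minimal Python sketch of what “learning” means in practice. It assumes a single linear neuron trained with gradient descent on the mean squared error. That method is our illustrative choice, since the article does not prescribe one, and the helper names are hypothetical. The number of parameters is fixed when the model is defined, and training only changes their values.

```python
import random


def init_params(n_inputs: int, seed: int = 0) -> dict:
    """Define the structure once: one weight per input plus one bias."""
    rng = random.Random(seed)
    return {"weights": [rng.uniform(-1, 1) for _ in range(n_inputs)], "bias": 0.0}


def predict(params: dict, x: list[float]) -> float:
    return sum(w * xi for w, xi in zip(params["weights"], x)) + params["bias"]


def train_step(params: dict, data: list, lr: float) -> dict:
    """One gradient-descent step on mean squared error: same parameters, new values."""
    n = len(data)
    grad_w = [0.0] * len(params["weights"])
    grad_b = 0.0
    for x, y in data:
        err = predict(params, x) - y
        for i, xi in enumerate(x):
            grad_w[i] += 2 * err * xi / n
        grad_b += 2 * err / n
    return {
        "weights": [w - lr * g for w, g in zip(params["weights"], grad_w)],
        "bias": params["bias"] - lr * grad_b,
    }


def count_params(params: dict) -> int:
    return len(params["weights"]) + 1


# Toy data following y = 2x + 1
data = [([x], 2.0 * x + 1.0) for x in [-1.0, 0.0, 1.0, 2.0]]
params = init_params(n_inputs=1)
before = count_params(params)
for _ in range(500):
    params = train_step(params, data, lr=0.05)
after = count_params(params)

print(before, after)  # prints 2 2: nothing grew or shrank
print(params)  # weight close to 2.0, bias close to 1.0
```

Large networks work the same way, only with millions or billions of parameters instead of two. Training adjusts their values but leaves their number and arrangement unchanged.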

3. “Artificial neural networks continue to learn on their own”

In point 1 we stated that ANNs are not artificial brains. Yet it is precisely this overly close comparison that leads people to believe neural networks learn independently, just as we humans do throughout our lives.

In fact, ANNs, like all other machine learning (ML) methods, have exactly two modes: a training mode and an inference (prediction) mode. A neural network learns only in training mode, and only when it is fed the appropriate data in exactly the right way. Image recognition software trained to distinguish dogs from cats may do that very well. But it will not start detecting humans by itself, let alone accidentally take over the world like Skynet in Terminator. Besides, a network deployed on a mobile phone, for example, usually has no training mode at all, so further learning is simply not possible.
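The two modes can be sketched in Python with a classic perceptron. The two feature values per example and the cat/dog labels are made up for illustration, and a real image classifier would be vastly larger. The principle is the same, though: parameters change only inside `train()`, while `predict()` merely reads them.

```python
from dataclasses import dataclass


@dataclass
class Perceptron:
    weights: list[float]
    bias: float = 0.0

    def predict(self, x: list[float]) -> int:
        """Inference mode: read the parameters, compute an output, change nothing."""
        z = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return 1 if z > 0 else 0

    def train(self, data: list[tuple[list[float], int]], epochs: int = 10, lr: float = 1.0) -> None:
        """Training mode: adjust the parameters after each misclassified example (perceptron rule)."""
        for _ in range(epochs):
            for x, label in data:
                error = label - self.predict(x)  # -1, 0 or +1
                self.weights = [w + lr * error * xi for w, xi in zip(self.weights, x)]
                self.bias += lr * error


# Hypothetical two-feature examples: label 0 = "cat", 1 = "dog"
training_data = [([1.0, 0.2], 0), ([0.9, 0.3], 0), ([0.2, 1.0], 1), ([0.3, 0.8], 1)]

model = Perceptron(weights=[0.0, 0.0])
model.train(training_data)  # the only place where the parameters change

# Inference: any number of predictions leaves the parameters untouched
frozen = (list(model.weights), model.bias)
predictions = [model.predict([0.25, 0.9]), model.predict([0.95, 0.1])]
assert (model.weights, model.bias) == frozen
print(predictions)  # prints [1, 0]
```

`predict()` can only ever return 0 or 1. Nothing in inference mode could make this model start recognizing a third category such as humans. That would require someone to change the model and train it again on new, labelled data.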

4. “Neural networks are a brand new technology”

ANNs have only caught the public’s attention in the last 15 years, but neural networks have existed almost as long as computer science itself. Frank Rosenblatt’s perceptron dates back to 1958. So they are actually old hat. Only in recent years, however, have algorithmic advances, far more accessible data and greater computing power made their practical application commercially viable.

5. “Neural networks are real AI”

People who use the term “real AI” usually mean artificial general intelligence. This would be a human-created intelligence with everything we consider essential to intelligence: consciousness, general world knowledge, and the ability to reflect and abstract. This is where the limitations of present-day ANNs start to show.

Neural networks still have the same problems as other machine learning methods: they are total idiots that have no idea what they are doing. Of course, we perceive them as closer to natural intelligence than, say, a manually built decision tree or a chess computer. But that doesn’t make them any more “real” than other methods.

True enough: ANNs today let us solve many problems that would have been science fiction for a computer 20 years ago. But human (or even animal) minds and ANNs (even those from Google or Facebook) are still worlds apart, and this will remain so for decades, centuries or even millennia. We still do not understand how the human brain “generates” intelligence, so we cannot yet replicate it.