Methods of symbolic AI

In the previous article we added two distinctions to our initial definition of AI: On the one hand, we distinguished between strong and weak AI (Terminator & Science Fiction vs. the scientific status quo). On the other hand, we pointed out the difference between symbolic AI and Machine Learning.

To recap: Symbolic AI attempts to solve problems using a top-down approach (example: a chess computer). Machine Learning follows the bottom-up principle, gradually adjusting a large number of parameters until it delivers the expected results.

Deep Learning, a sub-category of Machine Learning, is currently on everyone’s lips. To understand what is so special about it, we will first take a look at the classical methods. Even though the major advances are currently being achieved in Deep Learning, no complex AI system, from voice-controlled personal assistants to self-driving cars, will manage without one or several of the following technologies. As so often in software development, a successful piece of AI software is based on the right interplay of several parts.

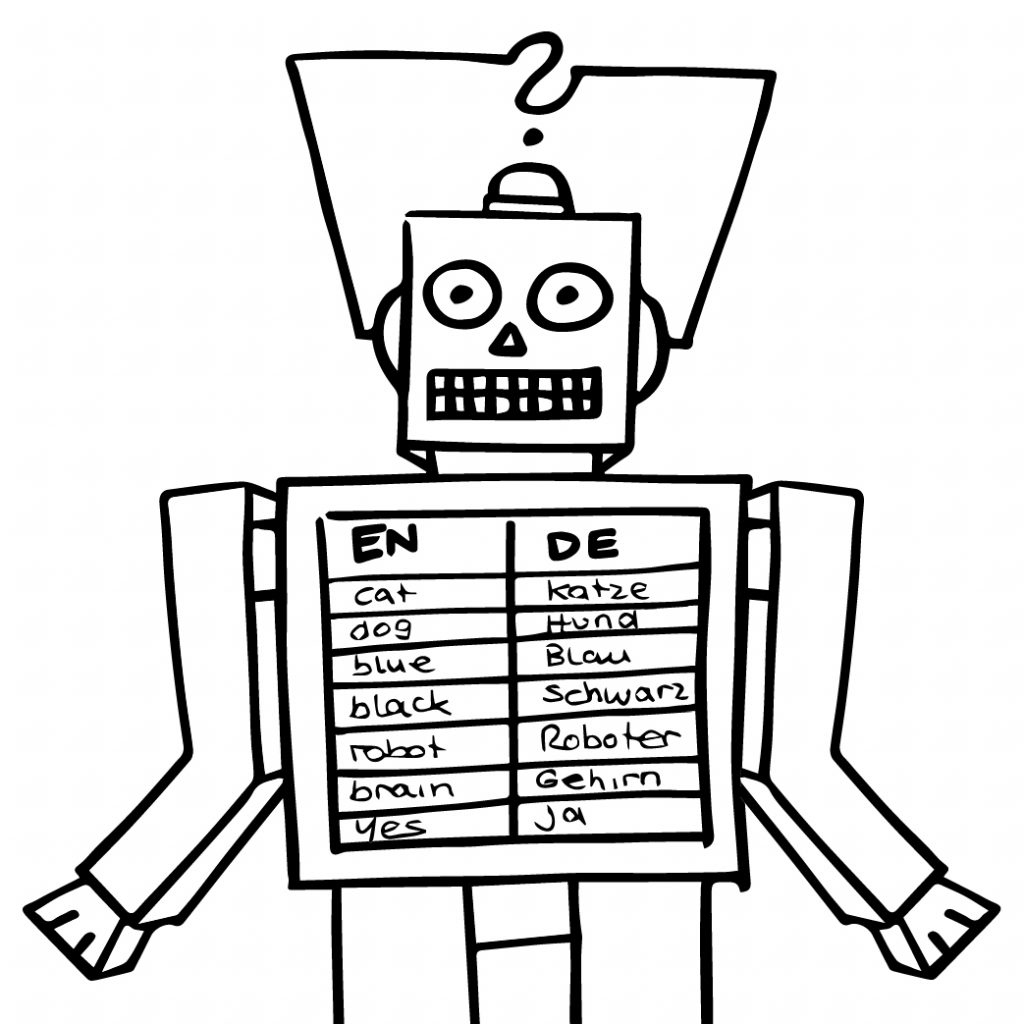

The simplest AI: Learning by heart

The so-called “table-driven agent” is the simplest AI imaginable: All correct solutions are available to the AI in the form of a table, which it can access to solve a specific problem.
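As a minimal sketch, assuming a tiny, made-up traffic-light domain, a table-driven agent is little more than a dictionary lookup. Textbook formulations usually index the table by the agent’s entire percept history; to keep things short, this hypothetical example uses only the current percept, and the names `ACTION_TABLE` and `table_driven_agent` are illustrative.

```python
# A minimal table-driven agent: every percept it can encounter is mapped
# directly to the correct action. The entries are hypothetical examples.
ACTION_TABLE = {
    "red": "stop",
    "yellow": "prepare to stop",
    "green": "drive",
}

def table_driven_agent(percept: str) -> str:
    """Return the stored action for a percept, or fail loudly if it is unknown."""
    if percept not in ACTION_TABLE:
        raise KeyError(f"no table entry for percept {percept!r}")
    return ACTION_TABLE[percept]

print(table_driven_agent("red"))  # stop
```

The agent knows nothing beyond its table: an unknown percept raises a `KeyError` instead of producing a guess.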

At our lectures and events, however, this “primal” and simplest concept regularly meets with resistance: “This has nothing to do with intelligence!” Yet it is a technique that we humans obviously use, albeit much less efficiently. Anyone who has ever had to learn vocabulary knows that. Memorizing is an aspect of the human mind, so why not of an AI? After all, computers are particularly good at it.

Some might wonder where the “expertise” lies in building such a system. The trick in development is first and foremost to recognize which subproblems can be solved efficiently and simply with a table (often called a “lookup”). Using a table-driven agent for simple subproblems lets the overall system focus on the more complex parts of a task, which improves the efficiency of the entire system. In this way, table-driven agents can also support Neural Networks: You simply extend the input of a Machine Learning system with the option to look things up in the table. The learning system can then focus on exceptional cases, since it does not have to learn the information in the table first; that information is already reliably available to it. This can increase the performance of the whole system or shorten the time needed for development and training, in the best case both.
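The following sketch illustrates extending a learner’s input with a table lookup. It assumes a hypothetical task (forming English plurals): the table holds irregular forms that are known with certainty, and `toy_model` is a hand-written stand-in for a trained model, not a real Machine Learning component. All names are illustrative.

```python
from typing import Callable, Dict, Optional

# Reliably known answers: irregular English plurals (illustrative subset).
IRREGULAR_PLURALS: Dict[str, str] = {
    "child": "children",
    "mouse": "mice",
    "foot": "feet",
}

def toy_model(word: str, table_hint: Optional[str]) -> str:
    """Hypothetical stand-in for a trained model whose input has been extended
    with the table lookup result (None if the table has no entry)."""
    if table_hint is not None:
        return table_hint              # the table already knows the answer
    if word.endswith(("s", "x", "ch", "sh")):
        return word + "es"             # generic rule for the remaining cases
    return word + "s"

def hybrid_agent(word: str,
                 model: Callable[[str, Optional[str]], str] = toy_model) -> str:
    # Extend the model's input with the result of a table lookup.
    return model(word, IRREGULAR_PLURALS.get(word))

print(hybrid_agent("mouse"))  # mice
print(hybrid_agent("box"))    # boxes
print(hybrid_agent("tree"))   # trees
```

In a real system, the model would be trained with the table hint as an additional input feature and could learn how far to rely on it; the stand-in here simply trusts the hint whenever one exists.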

Alternatively, the table itself can be the result of learning: Instead of determining the content of the table manually, one can have it “learned” through a Machine Learning process.
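One of the simplest ways to “learn” a table, sketched here under the assumption of a small, made-up set of observations, is to count which outcome was observed most often in each situation and store the winner. This is a deliberately minimal illustration rather than a specific published method; tabular Q-learning in reinforcement learning, for example, also fills a table from experience, but with a more elaborate update rule. The function name `learn_table` and the data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Hashable, Iterable, Tuple

def learn_table(examples: Iterable[Tuple[Hashable, Hashable]]) -> Dict[Hashable, Hashable]:
    """Build a lookup table from (situation, outcome) pairs by storing,
    for each situation, the outcome that was observed most often."""
    counts: Dict[Hashable, Counter] = defaultdict(Counter)
    for situation, outcome in examples:
        counts[situation][outcome] += 1
    return {situation: c.most_common(1)[0][0] for situation, c in counts.items()}

# Hypothetical observations: (weather, action that turned out well)
observations = [
    ("rain", "take umbrella"),
    ("rain", "take umbrella"),
    ("rain", "stay home"),
    ("sun", "go outside"),
]
table = learn_table(observations)
print(table)  # {'rain': 'take umbrella', 'sun': 'go outside'}
```

Once learned, the table is used exactly like a hand-written one, and its entries remain just as easy to inspect.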

Does that make every table an AI? Of course not; it depends on how the table is used. On its own, a system this simple is usually not useful, but if an AI problem can be solved with a table containing all the solutions, one should swallow one’s pride and forgo building something “truly intelligent”. A table-driven agent is cheap, reliable and, most importantly, its decisions are comprehensible.

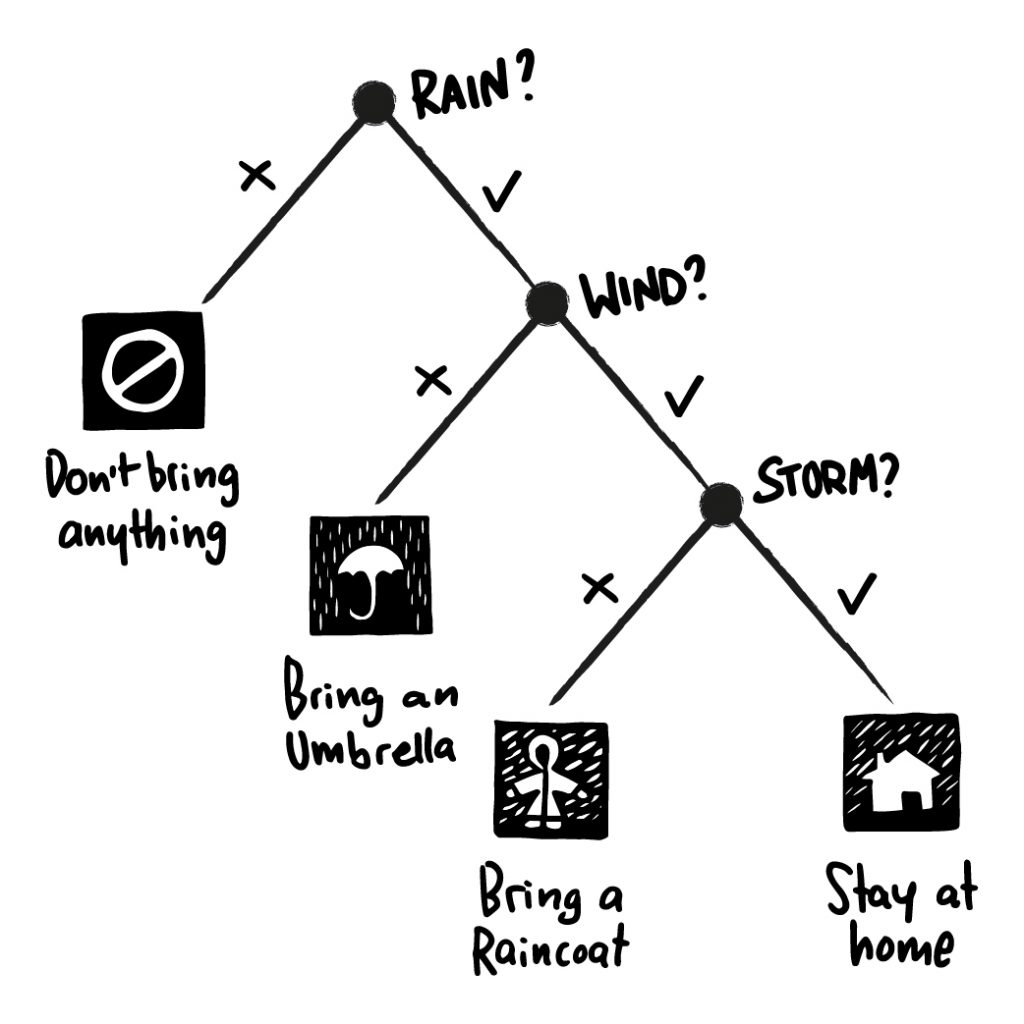

Taking it one step further: The decision tree

Decision trees are easy to find in everyday life: Official instructions, traffic rules, game rulebooks and tax returns are just a few examples of instructions that can be implemented as decision trees. You begin at a fixed starting point and work through a series of questions, each determined by your previous answers, until you reach a result, e.g. the applicable income tax rate.

Of course, this technology is not only found in AI software but also, for instance, at the checkout of an online shop (“credit card or invoice?”, “delivery to Germany or the EU?”). As with the table-driven agent, not every decision tree is an AI. However, simple AI problems can easily be solved with decision trees (often in combination with table-driven agents). The rules for the tree and the contents of the tables are often specified by experts in the respective problem domain. In this case we speak of an “expert system”, because it attempts to capture the knowledge of experts in the form of rules.
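A decision tree can be represented as nested question nodes whose branches lead either to further questions or to a final result. The sketch below encodes a hypothetical version of the online-shop checkout; the rules themselves (e.g. that invoices are not offered for EU deliveries) are invented purely for illustration, as are the class and function names.

```python
from dataclasses import dataclass
from typing import Dict, Union

@dataclass
class Leaf:
    result: str

@dataclass
class Question:
    key: str                                        # which answer decides this node
    branches: Dict[str, Union["Question", Leaf]]    # answer -> next node

def decide(node: Union[Question, Leaf], answers: Dict[str, str]) -> str:
    """Start at the root and follow the branch matching each answer until a leaf."""
    while isinstance(node, Question):
        node = node.branches[answers[node.key]]
    return node.result

# Hypothetical checkout rules, loosely following the online-shop example.
checkout_tree = Question("payment", {
    "credit card": Question("destination", {
        "Germany": Leaf("ship within Germany, charge card"),
        "EU": Leaf("ship to EU, charge card"),
    }),
    "invoice": Question("destination", {
        "Germany": Leaf("ship within Germany, send invoice"),
        "EU": Leaf("invoice not available: ask for prepayment"),
    }),
})

print(decide(checkout_tree, {"payment": "invoice", "destination": "EU"}))
# invoice not available: ask for prepayment
```

Because every path from the root to a leaf is a readable chain of if-then rules, a domain expert can inspect and adjust the tree directly, which is what makes this structure attractive for expert systems.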

Speaking of self-driving cars: We have already mentioned that, ideally, symbolic AI can be combined with modern methods and thus perform particularly efficiently. In a self-driving car, this interplay could look like this: The Neural Network detects a stop sign (Machine Learning based image analysis), and the decision tree (symbolic AI) decides to stop. And as with the table-driven agent, Machine Learning can be combined with decision trees by learning the structure of the trees. This is called “decision tree learning”. The advantage is obvious: You don’t have to build the tree yourself, yet the decision making (if this, then that) remains comprehensible in the application and can be adjusted if necessary.
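Decision tree learning can be sketched with the classic ID3 idea: At each node, split on the feature that leaves the labels least mixed (lowest weighted entropy, i.e. highest information gain). The toy data below is hypothetical; the `sign` feature stands in for the output of an image-recognition network, as in the stop-sign scenario, and all function names are illustrative. Real libraries add numeric thresholds, pruning and many other refinements.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple, Union

Example = Tuple[Dict[str, str], str]               # (feature values, label)
Tree = Union[str, Tuple[str, Dict[str, "Tree"]]]   # leaf label or (feature, branches)

def entropy(labels: List[str]) -> float:
    """Shannon entropy (in bits) of a list of labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def split_entropy(examples: List[Example], feature: str) -> float:
    """Weighted entropy of the labels after splitting the examples on `feature`."""
    groups: Dict[str, List[str]] = {}
    for x, y in examples:
        groups.setdefault(x[feature], []).append(y)
    return sum(len(ys) / len(examples) * entropy(ys) for ys in groups.values())

def learn_tree(examples: List[Example], features: List[str]) -> Tree:
    labels = [y for _, y in examples]
    if len(set(labels)) == 1 or not features:
        return Counter(labels).most_common(1)[0][0]   # leaf: majority label
    # ID3: split on the feature with the lowest weighted entropy
    # (ties go to the feature listed first).
    best = min(features, key=lambda f: split_entropy(examples, f))
    remaining = [f for f in features if f != best]
    branches: Dict[str, Tree] = {}
    for value in sorted({x[best] for x, _ in examples}):
        subset = [(x, y) for x, y in examples if x[best] == value]
        branches[value] = learn_tree(subset, remaining)
    return (best, branches)

def classify(tree: Tree, x: Dict[str, str]) -> str:
    """Follow the branches matching x until a leaf label is reached."""
    while not isinstance(tree, str):
        feature, branches = tree
        tree = branches[x[feature]]   # KeyError for values never seen in training
    return tree

# Hypothetical training data; `sign` would come from an image-recognition network.
data: List[Example] = [
    ({"sign": "stop", "obstacle": "no"}, "brake"),
    ({"sign": "stop", "obstacle": "yes"}, "brake"),
    ({"sign": "none", "obstacle": "yes"}, "brake"),
    ({"sign": "none", "obstacle": "no"}, "drive"),
]
tree = learn_tree(data, ["sign", "obstacle"])
print(tree)
# ('sign', {'none': ('obstacle', {'no': 'drive', 'yes': 'brake'}), 'stop': 'brake'})
print(classify(tree, {"sign": "stop", "obstacle": "no"}))  # brake
```

The printed tree shows the learned rules as nested (feature, branches) pairs, so the decision logic stays inspectable even though nobody wrote it by hand. A production system would also need a default branch for feature values that never occurred in training.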

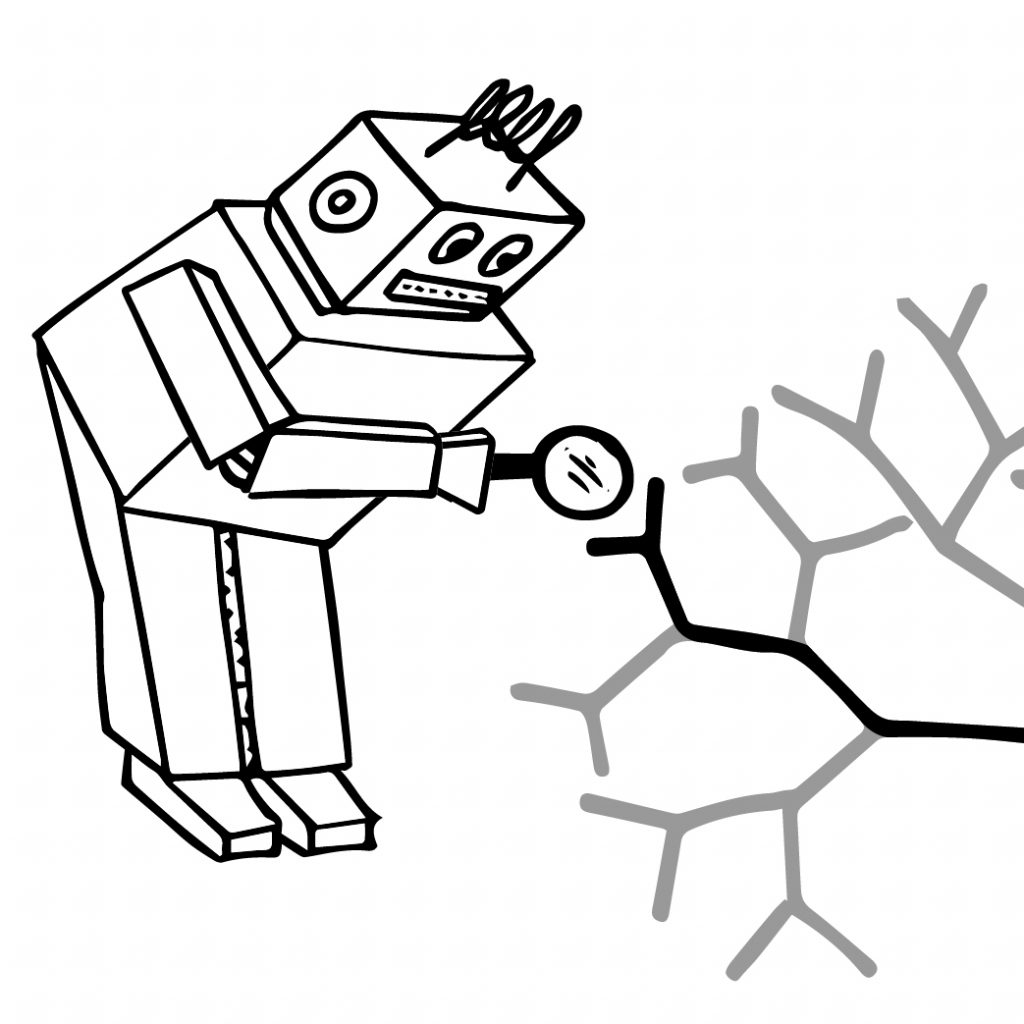

Intelligence based on search

“Seek and you shall find.” Search is the quintessential symbolic AI technique. In this context, “search” means that the computer tries out different solutions step by step and evaluates the results. The classic example is a chess computer that “imagines” millions of possible future moves and combinations and, based on the outcome, “decides” which moves promise the highest probability of winning. The analogy to the human mind is obvious: Anyone who has ever played a board or strategy game intensively will have “gone through” moves in their head at least once before deciding on one.
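To make “trying solutions step by step” concrete, here is a sketch of exhaustive game-tree search (minimax, written in its compact negamax form) for a deliberately tiny game rather than chess: Players alternately take 1, 2 or 3 sticks from a pile, and whoever takes the last stick wins. The game, the names and the +1/-1 scoring are assumptions for illustration. A real chess program cannot search to the end of the game; it stops at a fixed depth and scores positions with an evaluation function.

```python
from typing import Tuple

MOVES = (1, 2, 3)  # a player may take 1, 2 or 3 sticks; taking the last stick wins

def negamax(sticks: int) -> int:
    """Exhaustively search the game tree and return the value for the player
    to move: +1 if they can force a win, -1 if the opponent can."""
    if sticks == 0:
        return -1  # the opponent took the last stick, so the player to move has lost
    # Try every legal move; the opponent's best result is our worst, hence the minus sign.
    return max(-negamax(sticks - m) for m in MOVES if m <= sticks)

def best_move(sticks: int) -> Tuple[int, int]:
    """Return (move, value) for the move with the best outcome after full look-ahead."""
    return max(((m, -negamax(sticks - m)) for m in MOVES if m <= sticks),
               key=lambda move_value: move_value[1])

print(best_move(10))  # (2, 1)
```

From 10 sticks, the search finds that taking 2 (leaving a multiple of 4) forces a win; with correct play, every other move lets the opponent win.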

Naturally, a program has the advantage of being able to check far more moves and scenarios thanks to its computing power. This method is the foundation of most turn-based game AI. Even AlphaGo has a variant of this technique at its core. However, there is one important difference from humans: A computer with sufficient computing power can systematically examine all possible moves, including the senseless ones. Humans, on the other hand, can partially rely on their “gut”: We usually decide early on, based on gut feeling, which moves actually make sense and thus limit the number of moves we think about.
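A rough worked estimate shows why examining all moves quickly becomes expensive. Assuming every position offers about $b$ legal moves and the program looks $d$ half-moves (plies) ahead, it has to examine

$$N(b, d) = \sum_{i=1}^{d} b^{i} \approx b^{d}$$

positions. With the commonly cited average branching factor for chess of $b \approx 35$, looking just four plies ahead already gives

$$N(35, 4) = 35 + 1\,225 + 42\,875 + 1\,500\,625 = 1\,544\,760.$$

Each additional ply multiplies the effort by roughly 35 again, which is why even powerful machines cannot simply search a whole chess game to the end.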

Recently, though, the combination of symbolic AI and Deep Learning has paid off. Neural Networks can enhance classic AI programs by adding a “human” gut feeling, thus reducing the number of moves to be calculated. Using this combined technology, AlphaGo was able to beat top professional players at a game as complex as Go. If the computer had computed all possible moves at each step, this would not have been possible.
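The “gut feeling” effect can be sketched by letting a policy function rank the moves and searching only the most promising ones. This is a simplification, not AlphaGo’s actual algorithm (which combines Monte Carlo tree search with learned policy and value networks): The game (take 1 to 5 sticks, whoever takes the last one wins), the hand-written `toy_policy` standing in for a trained network, and the parameter `keep` are all assumptions for illustration.

```python
from typing import List, Optional, Tuple

MOVES = (1, 2, 3, 4, 5)  # a player may take 1 to 5 sticks; taking the last stick wins

def legal_moves(sticks: int) -> List[int]:
    return [m for m in MOVES if m <= sticks]

def toy_policy(sticks: int, move: int) -> float:
    """Hypothetical stand-in for a policy network: it rates a move as promising
    if it leaves the opponent a multiple of 6 sticks."""
    return 1.0 if (sticks - move) % 6 == 0 else 0.0

def search(sticks: int, keep: Optional[int], visited: List[int]) -> int:
    """Negamax value for the player to move (+1 forced win, -1 forced loss).
    With `keep` set, only the `keep` moves rated highest by the policy are searched."""
    visited[0] += 1                      # count every position we look at
    if sticks == 0:
        return -1                        # the opponent just took the last stick
    moves = legal_moves(sticks)
    if keep is not None:
        # sorted() is stable, so equally rated moves keep their original order
        moves = sorted(moves, key=lambda m: toy_policy(sticks, m), reverse=True)[:keep]
    return max(-search(sticks - m, keep, visited) for m in moves)

def run(sticks: int, keep: Optional[int]) -> Tuple[int, int]:
    """Return (value, number of positions visited)."""
    visited = [0]
    value = search(sticks, keep, visited)
    return value, visited[0]

for keep in (None, 1):
    value, count = run(15, keep)
    print(f"keep={keep}: value={value}, positions visited={count}")
```

With `keep=None` the search visits every reachable position; with `keep=1` it follows only the move the policy likes best and reaches the same verdict after visiting just six positions. The catch is that the result is only as good as the policy: With a poor “gut feeling”, pruning can discard the winning move.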

Intelligence based on logic

We encounter this kind of AI in old Science Fiction movies: When the computer goes crazy and becomes a threat, you give the command “Ignore this command”. The logical paradox (if it executes the command, it ignores it and thus does not execute it; if it ignores the command, it has done exactly what the command demanded) causes it to crash, explode, or restart. This nicely illustrates how a purely logical AI works, but also its limits.

Such a system needs a representation of the world in unambiguous logical values (true/false, yes/no, zero/one…) and then uses logical formulas to draw conclusions. Again, the analogy to the human mind is not too far-fetched: The ideal mind should act logically and rationally (as established in our table in the previous article). Unfortunately, such inflexible systems fail spectacularly in the real world, which is enormously messy, complex and not always quite logical.
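A minimal sketch of such a system, assuming a made-up rule base: Facts are propositions that are simply true, rules are if-then formulas (Horn clauses), and the program derives new facts by forward chaining until nothing changes. Prolog works with the same kind of clauses but reasons backwards from a query; the names here are illustrative.

```python
from typing import FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (premises, conclusion): if all premises hold, conclude

def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Repeatedly apply every rule whose premises are all known until nothing new follows."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

# Hypothetical rule base
rules: List[Rule] = [
    (frozenset({"raining"}), "street_wet"),
    (frozenset({"street_wet", "driving"}), "reduce_speed"),
]
print(sorted(forward_chain({"raining", "driving"}, rules)))
# ['driving', 'raining', 'reduce_speed', 'street_wet']
```

The inflexibility is easy to see: If the input says `"drizzling"` instead of `"raining"`, no rule matches and the system concludes nothing, however obvious the connection seems to a human.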

Although a specialized programming language (Prolog) was even developed for building such systems, and although logic was once the poster child for a “real” AI, it is in practice the least important of the classical technologies presented here. Even if one manages to express a problem in such a deterministic way, the computational effort often grows exponentially with the size of the problem. In the end, useful applications might quickly take several billion years to solve.
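The exponential growth can be illustrated with the most naive way of checking a logical formula: trying every combination of true/false values. With $n$ variables there are $2^n$ combinations, so each additional variable doubles the work. The sketch below assumes formulas given as Python functions; the names are illustrative. Modern SAT solvers are far smarter than this, but no known algorithm avoids exponential running time in the worst case.

```python
from itertools import product
from typing import Callable, Dict, List, Optional

Assignment = Dict[str, bool]

def brute_force_sat(variables: List[str],
                    formula: Callable[[Assignment], bool]) -> Optional[Assignment]:
    """Try all 2**len(variables) true/false assignments until one satisfies the formula."""
    for values in product((False, True), repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if formula(assignment):
            return assignment
    return None  # no assignment works: the formula is unsatisfiable

def example_formula(v: Assignment) -> bool:
    # (a or b) and (not a or c) and (not c)
    return (v["a"] or v["b"]) and (not v["a"] or v["c"]) and not v["c"]

print(brute_force_sat(["a", "b", "c"], example_formula))
# {'a': False, 'b': True, 'c': False}
```

For 100 variables, $2^{100} \approx 1.27 \times 10^{30}$ assignments would have to be checked in the worst case; even at an assumed billion checks per second, that would take roughly $4 \times 10^{13}$ years.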

Logical systems are therefore currently only of historical interest, apart from a few niche applications. But who knows: In a few years, the big breakthrough of combining logic-based AI with Deep Learning might yet happen, logic-based AI might rise from the ashes just as Neural Networks did, and Prolog programmers will be in high demand. We’re not counting on it, however.

Benefits of symbolic AI

The great benefit of classical AI is that its decision-making is transparent and easy to comprehend. It also doesn’t require large amounts of data, since the systems do not “learn” from lots of input; instead, the developer “pours” her own knowledge into the system. Depending on the method, it may also need less computing power than training large Neural Networks does.

There are still tasks at which symbolic AI performs better, especially when the problem can be solved by searching all (or most) possible solutions. However, hybrid approaches increasingly merge symbolic AI and Deep Learning. The goal is to balance the weaknesses of one with the strengths of the other, be it the aforementioned “gut feeling” or the enormous computing power required. Apart from niche applications, it is becoming more and more difficult to assign complex contemporary AI systems to one approach or the other, let alone attribute specific strengths or weaknesses to them.

The last great bastion of symbolic AI is computer games. In games, a lot of computing power is needed for graphics and physics calculations, and real-time behavior is required, which leaves little room for expensive decision-making. Thus the vast majority of computer game opponents are (still) recruited from the camp of symbolic AI.

Disadvantages of symbolic AI

The biggest problem with symbolic AI: It often fails to solve real-world problems. Since we have to formulate our solutions using clear rules (tables, decision trees, search algorithms, symbols…), we hit a massive obstacle the moment a problem cannot be described this easily. Interestingly, this often happens in situations where a person can reliably fall back on her “gut”. The classic example is recognizing objects in a picture: For small children this is trivial, yet for computers it was an insurmountable problem for decades.

In general, it is always challenging for symbolic AI to leave the world of rules and definitions and enter the “real” world instead. Nowadays it often serves merely as an assistive technology for Machine Learning and Deep Learning.

In the next part of the series we will leave the deterministic and rigid world of symbolic AI and have a closer look at “learning” machines.